Introduction to HPC Systems

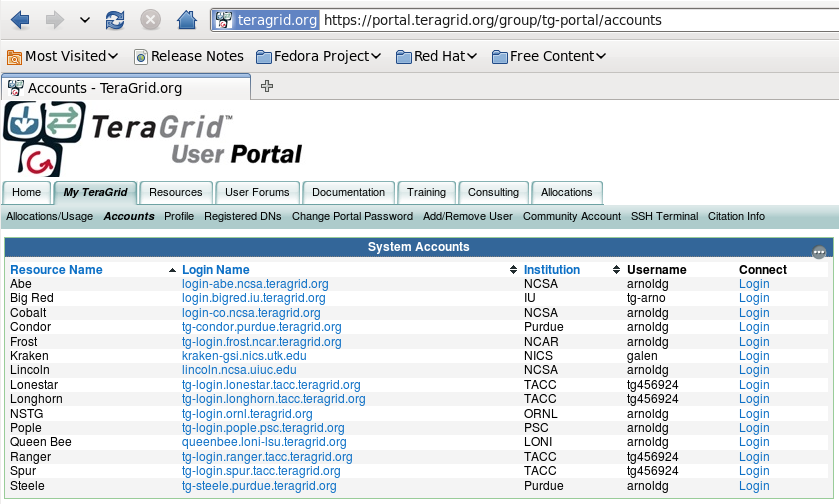

Presented by Galen Arnold

The portal.teragrid.org page lists the hosts available to you under My TeraGrid -> Accounts.

Security starts with you. We recommend using a password management package such as KeePass.

Example ssh sessions from a local site system to various TeraGrid login hosts, after first obtaining a proxy credential with myproxy-logon:

[arnoldg@co-login ~]$ myproxy-logon -s myproxy.ncsa.uiuc.edu
Enter MyProxy pass phrase:
A credential has been received for user arnoldg in /tmp/x509up_u25114.
[arnoldg@co-login ~]$ ssh tg-login.ranger.tacc.teragrid.org hostname
login3.ranger.tacc.utexas.edu
[arnoldg@co-login ~]$ ssh tg-login.lonestar.tacc.teragrid.org hostname
lslogin2
[arnoldg@co-login ~]$ ssh login.bigred.iu.teragrid.org hostname
s10c2b6
[arnoldg@co-login ~]$ ssh queenbee.loni-lsu.teragrid.org hostname
qb1
[arnoldg@co-login ~]$ ssh tg-steele.purdue.teragrid.org hostname
tg-steele.rcac.purdue.edu

Consult local site documentation for details about:

- compiling code

- batch system

- filesystem policies

- technical specifications for the system

Lonestar User Guide

Kraken User Guide

The Big Red Cluster

Queen Bee User Guide

Purdue Steele User Guide

PSC resources

NCAR Bluefire User Guide
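The login checks in the example ssh session earlier in this section can be repeated for every host in one loop. This is a minimal sketch. It assumes that ssh on the local system can authenticate to each host without a password prompt (for example, using the myproxy-logon credential shown in that session). The host list is copied from the session. `check_hosts` and `DRY_RUN` are hypothetical names: with `DRY_RUN=1` (the default here) the script only prints the commands, and with `DRY_RUN=0` it actually connects.

```bash
#!/bin/bash
# Sketch: run `hostname` on each TeraGrid login host from the example session.
# Assumes password-less ssh (e.g. a proxy from myproxy-logon).
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 really connects.
DRY_RUN=${DRY_RUN:-1}
hosts=(
  tg-login.ranger.tacc.teragrid.org
  tg-login.lonestar.tacc.teragrid.org
  login.bigred.iu.teragrid.org
  queenbee.loni-lsu.teragrid.org
  tg-steele.purdue.teragrid.org
)

check_hosts() {
  local h
  for h in "${hosts[@]}"; do
    if [ "$DRY_RUN" = 1 ]; then
      echo "ssh $h hostname"
    else
      # BatchMode=yes makes ssh fail instead of stopping at a password prompt.
      printf '%s: ' "$h"
      ssh -o BatchMode=yes "$h" hostname || echo "login failed"
    fi
  done
}

check_hosts
```

Running it as `DRY_RUN=0 ./check_hosts.sh` (the file name is illustrative) prints each host name next to the remote `hostname` output. This makes it easy to spot a site where your credential is not accepted.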

Batch system specifics

LONI, Queen Bee

#LONI queues
#1. Use the queue named workq:
#PBS -q workq
#2. Use no more than 4 nodes, and always use 8 processes per node, which yields 32 cores per job:
#PBS -l nodes=4:ppn=8
#3. Use no more than 1 hour of wall-clock time per job request:
#PBS -l walltime=1:00:00

IU, Big Red

#@ class = NORMAL
#@ account_no = TG-TRA900337N

Purdue, Steele

#PBS -q standby
#PBS -A TG-TRA100001S
#PBS -l walltime=00:15:00

TACC, Lonestar or Ranger

qsub -q development

Kraken

#PBS -q debug
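Putting the three LONI rules together, a complete Queen Bee job script might look like the sketch below. Several details are assumptions rather than site guidance: the job name, the `-j oe` option (which merges stdout and stderr), the placeholder program `./my_mpi_program`, and the commented-out mpirun line. Check the Queen Bee User Guide for the correct MPI launcher before using it.

```bash
#!/bin/bash
# Hypothetical Queen Bee job script following the three LONI rules above.
#PBS -q workq
#PBS -l nodes=4:ppn=8
#PBS -l walltime=01:00:00
#PBS -N qb_example
#PBS -j oe

# Must match the nodes=/ppn= request: #PBS lines are read by qsub,
# not by the shell, so they cannot use these variables.
NODES=4
PPN=8
EXPECTED=$((NODES * PPN))   # 32 cores, the per-job maximum on Queen Bee

# PBS starts the job in $HOME; move to the directory qsub was run from.
# The fallbacks let the script also run outside PBS as a dry run.
cd "${PBS_O_WORKDIR:-$PWD}" || exit 1
if [ -n "${PBS_NODEFILE:-}" ]; then
  NP=$(( $(wc -l < "$PBS_NODEFILE") ))   # one line per allocated core
else
  NP=$EXPECTED
fi
echo "job ${PBS_JOBID:-outside-pbs}: $NP cores (expected $EXPECTED)"

# The MPI launcher is site-specific; check the Queen Bee User Guide before
# uncommenting. ./my_mpi_program is a placeholder.
# mpirun -np "$NP" -machinefile "$PBS_NODEFILE" ./my_mpi_program
```

Save it under any name (qb_job.pbs, for example) and submit it from a Queen Bee login node with `qsub qb_job.pbs`. The same layout works for the Steele and Kraken directives above once you change the queue and walltime lines.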

Filesystems

[arnoldg@co-login ~]$ ssh tg-login.ranger.tacc.teragrid.org "tg-policy -data"
Site Data Policy:
-----------------
The TACC HPC platforms have several different file systems with distinct storage characteristics. There are predefined, user-owned directories in these file systems for users to store their data. Of course, these file systems are shared with other users, so they are managed by a quota limit, a purge policy (time-residency) limit, or a migration policy.

Home directory
The system automatically changes to a user's home directory at login, and this is the recommended location to store your source code and build your executables. $HOME is quota limited; use the quota command to determine your quota. Use $HOME to reference your home directory in scripts. Use cd to change to $HOME.

Work directory
Store large files and perform most of your job runs from this file system. This file system is accessible from all the nodes. Work files are subject to a 7-day purge policy. Use $WORK to reference your work directory in scripts. Use cdw to change to $WORK.

Scratch or temporary directory
A directory on each node where you can store files and perform local I/O for the duration of a batch job; it is available only while the job runs. Often, in batch jobs it is more efficient to use and store files directly on $WORK (to avoid moving files from scratch at the end of a job). Use $SCRATCH to reference a temporary directory in scripts.

SAN directory
The SAN directory is available on the login nodes (front ends) of Lonestar (and Longhorn). Space on the SAN is an allocatable resource; that is, space is not automatically allocated to a project, and the Principal Investigator must request space on this file system.

Archive directory
Store permanent files here. This file system has "archive" characteristics (see the TACC Lonestar User Guide). The access speed is low relative to the work directory. Use $ARCHIVE to reference your archive directory in scripts. Use cda to change to $ARCHIVE.

Home directories are backed up daily for protection against catastrophic events. Day-to-day management of data files is therefore the user's responsibility. Scratch areas and parallel filesystems are not backed up, and data storage for any length of time beyond the purge period is not guaranteed.
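Because $WORK is purged after 7 days and only home directories are backed up, results worth keeping need to be copied to $ARCHIVE. This minimal sketch assumes that $ARCHIVE is a directory you can write to directly, as the policy text implies. If archive storage is only reachable through a separate host on your system, substitute the appropriate copy command. `archive_run` and `run_001` are hypothetical names. The /tmp fallbacks exist only so the sketch can be tried off TACC systems.

```bash
#!/bin/bash
# Sketch: save a finished run from $WORK (purged after 7 days) to $ARCHIVE
# as one tar file. Assumes $ARCHIVE is a writable directory, as the policy
# text implies. The /tmp fallbacks are only for trying this off TACC systems.
: "${WORK:=$(mktemp -d)}"
: "${ARCHIVE:=$(mktemp -d)}"

archive_run() {   # $1 = name of a run directory under $WORK (hypothetical)
  local run=$1
  if [ ! -d "$WORK/$run" ]; then
    echo "no such run directory: $WORK/$run" >&2
    return 1
  fi
  # A single large file suits an archive file system better than many small ones.
  tar -C "$WORK" -cf "$ARCHIVE/$run.tar" "$run" || return 1
  # Read the tar file back to confirm the copy is usable before $WORK is purged.
  tar -tf "$ARCHIVE/$run.tar" > /dev/null || return 1
  echo "archived $WORK/$run -> $ARCHIVE/$run.tar"
}
```

After a job finishes, `archive_run run_001` writes $ARCHIVE/run_001.tar and leaves the original directory on $WORK in place.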

Moving Data

- ssh methods: scp, sftp, sshfs # good for a small number of files and modest data sizes (< 1 GB)

- FileZilla # good for large numbers of files, larger data

  - Tweak the Maximum Simultaneous Transfers setting

- grid-ftp programs: uberftp, globus-url-copy
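The size guideline in the list above can be written down as a small helper. This sketch works under stated assumptions:

- The 1 GB cutoff is the rule of thumb from the slide.
- `choose_tool` and `send_file` are hypothetical names.
- The globus-url-copy options are standard ones that mirror the uberftp tuning in the performance example: `-vb` prints transfer rates, `-p` sets parallel streams, and `-tcp-bs` sets the TCP buffer size.

The globus-url-copy branch needs a valid proxy credential.

```bash
#!/bin/bash
# Sketch of the rule of thumb above: scp for files under 1 GB,
# globus-url-copy (GridFTP) for anything larger. Host/URL arguments are placeholders.
LIMIT_BYTES=$((1024 * 1024 * 1024))

choose_tool() {   # $1 = local file; prints the tool to use
  local size
  size=$(( $(wc -c < "$1") ))   # $(( )) strips padding some wc versions add
  if [ "$size" -lt "$LIMIT_BYTES" ]; then echo scp; else echo globus-url-copy; fi
}

send_file() {   # $1 = local file, $2 = ssh host, $3 = gsiftp:// destination URL
  local abs
  abs="$(cd "$(dirname "$1")" && pwd)/$(basename "$1")"   # file:// needs an absolute path
  case $(choose_tool "$1") in
    scp) scp "$1" "$2:" ;;
    # Needs a proxy (myproxy-logon). -p: parallel streams, -tcp-bs: TCP buffer
    # size in bytes, -vb: report transfer performance.
    globus-url-copy) globus-url-copy -vb -p 8 -tcp-bs 8000000 "file://$abs" "$3" ;;
  esac
}
```

For example: `send_file test.tar tg-login.ranger.tacc.teragrid.org gsiftp://gridftp.ranger.tacc.teragrid.org/share/home/00017/tg456924/test.tar`.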

File Transfer Performance Example (from ci-tutor.ncsa.uiuc.edu)

Transferring files can take a significant amount of time, even with optimal tuning. The following examples compare and demonstrate transfer performance using the methods discussed above.

Comparing Transfer Methods between TeraGrid Resources

This example compares the performance of several methods for transferring a 4 GB file between Cobalt at NCSA and Ranger at TACC. First, let's look at performance with no tuning, using scp:

$ time scp test.tar ranger.tacc.teragrid.org:test.tar
100% 4124MB 8.3MB/s 08:19
452.052u 20.476s 8:20.72 94.3% 0+0k 0+0io 39pf+0w

From the output we see that transferring 4 GB took about 8 minutes 20 seconds (roughly 8.3 MB/s). That is not impressive performance, so let's see whether GridFTP-capable tools can improve on it. In our examples, the source URLs use `pwd` (backquote command substitution), a Unix shell idiom that expands to the current directory in place. It's a convenient shorthand for commands that require full pathnames.
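The backquoted `pwd` is ordinary shell command substitution. The `$(pwd)` form does the same thing and nests more easily. This small sketch shows that both build the same source URL. The variable names are illustrative, and the host is the source server used in these examples.

```bash
#!/bin/bash
# Command substitution: `pwd` and $(pwd) both expand to the current directory.
SRC_HOST=grid-co.ncsa.teragrid.org          # source GridFTP server in the examples
old_style="gsiftp://$SRC_HOST/`pwd`/test.tar"
new_style="gsiftp://$SRC_HOST/$(pwd)/test.tar"
echo "$new_style"
```

Because pwd prints an absolute path starting with /, the resulting URL has a double slash after the host name. This is exactly what happens in the uberftp commands in this section.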


First, we obtain a certificate proxy because uberftp and globus-url-copy require proxy authentication.

$ myproxy-logon -s myproxy
Enter MyProxy pass phrase: XXXXXXXXXXXXXXXX
A credential has been received for user arnoldg in /tmp/x509up_u25114.
$ grid-proxy-info
subject  : /C=US/O=National Center for Supercomputing Applications/CN=Galen Arnold
issuer   : /C=US/O=National Center for Supercomputing Applications/OU=Certificate Authorities/CN=MyProxy
identity : /C=US/O=National Center for Supercomputing Applications/CN=Galen Arnold
type     : end entity credential
strength : 1024 bits
path     : /tmp/x509up_u25114
timeleft : 11:59:26

Now that we have our certificate proxy, we'll try uberftp without any tuning options for a baseline:

$ time uberftp \
  gsiftp://grid-co.ncsa.teragrid.org/`pwd`/test.tar \
  gsiftp://gridftp.ranger.tacc.teragrid.org/share/home/00017/tg456924
21.020u 45.372s 1:07.85 97.8% 0+0k 0+0io 0pf+0w

The transfer time dropped from eight minutes to a little over one minute, so there's good reason to use something other than scp for large files or tar bundles.

Next we add tuning options to uberftp for parallel data streams (-parallel) and TCP buffer size (-tcpbuf), which yields:

$ time uberftp -parallel 8 -tcpbuf 8000000 \
  gsiftp://grid-co.ncsa.teragrid.org/`pwd`/test.tar \
  gsiftp://gridftp.ranger.tacc.teragrid.org/share/home/00017/tg456924
15.080u 26.940s 0:43.60 96.3% 0+0k 0+0io 7pf+0w

The transfer time of about 44 seconds is more than an order of magnitude faster than the time required to scp the file. If the data to be moved were hundreds of gigabytes or a few terabytes, selecting the proper transfer method could make the difference between minutes and hours or days.
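To check the "order of magnitude" claim, the elapsed (wall-clock) times reported by `time` in the three runs above can be turned into effective throughput and speedup relative to scp. This sketch uses the 4124 MB size from the scp progress line. The row labels are illustrative.

```bash
#!/bin/bash
# Effective throughput and speedup for the 4124 MB test.tar transfers above.
# Elapsed times are the wall-clock values reported by `time`.
SIZE_MB=4124
results=$(awk -v size="$SIZE_MB" 'BEGIN {
  n = split("scp uberftp uberftp-tuned", name, " ")
  t[1] = 8*60 + 20.72   # scp: 8:20.72
  t[2] = 60 + 7.85      # uberftp, no tuning: 1:07.85
  t[3] = 43.60          # uberftp -parallel 8 -tcpbuf 8000000: 0:43.60
  for (i = 1; i <= n; i++)
    printf "%-14s %7.2f s %6.1f MB/s %5.1fx\n", name[i], t[i], size / t[i], t[1] / t[i]
}')
printf '%s\n' "$results"
```

It reports about 8.2, 60.8 and 94.6 MB/s for the three runs. The untuned uberftp run is therefore about 7.4x faster than scp, and the tuned run about 11.5x faster, which is consistent with the claim above.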